Improving Image De-raining Models Using Reference-guided Transformers

Abstract

Image de-raining is a critical task in computer vision that improves visibility and enhances the robustness of outdoor vision systems. Although recent de-raining methods have achieved remarkable performance, producing high-quality, visually pleasing de-rained results remains a challenge. In this paper, we present a reference-guided de-raining filter, a transformer module that enhances de-raining results using a clean reference image as guidance. We use the proposed module to further refine images de-rained by existing methods. We validate our method on three datasets and show that our module improves the performance of existing prior-based, CNN-based, and transformer-based approaches.

Method

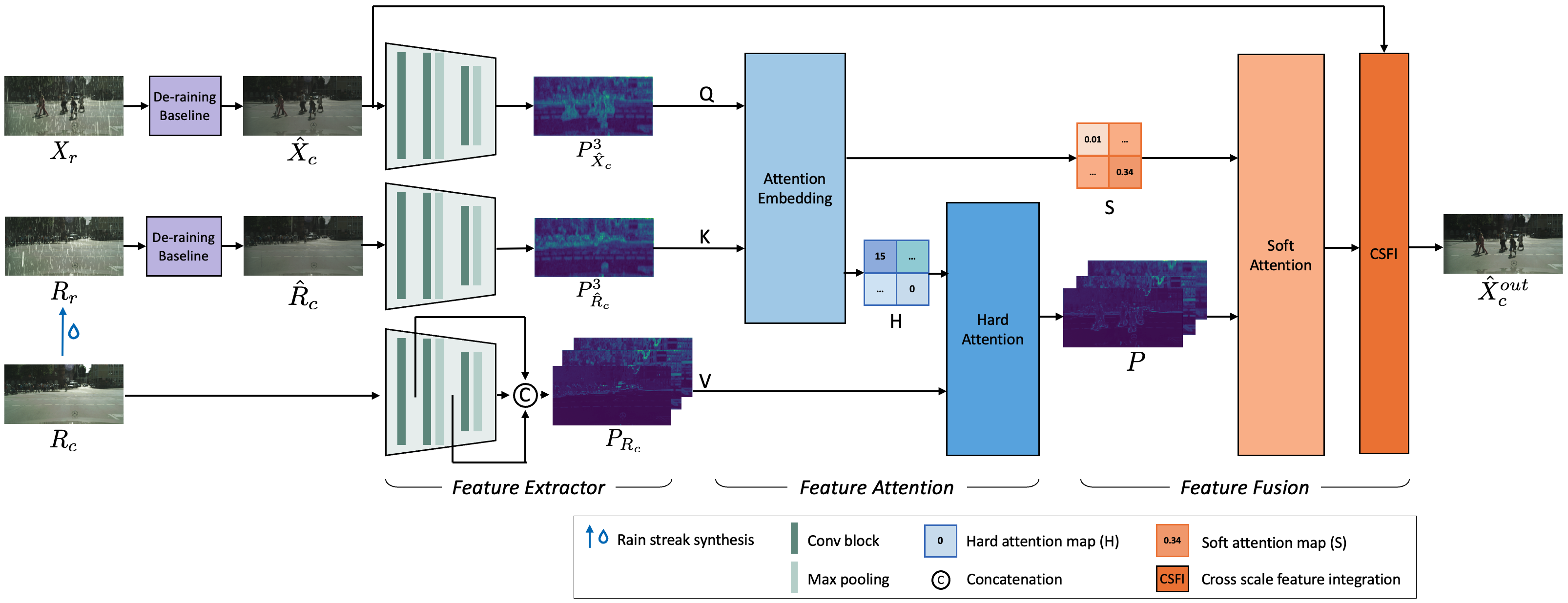

Overview of the proposed reference-guided transformers for de-raining enhancement. De-rained results are first obtained from a baseline de-raining model. The pipeline takes three inputs: the de-rained image \(\hat X_c\), the de-rained reference image \(\hat R_c\), and the clean reference image \(R_c\). The Feature Extractor module first projects them into feature space, yielding \(P^3_{\hat X_c}\), \(P^3_{\hat R_c}\), and \(P^3_{R_c}\). The Feature Attention module then computes the relevance between the query \(P^3_{\hat X_c}\) and the key \(P^3_{\hat R_c}\) and, following the hard attention map \(H\), outputs the useful feature \(P\), taking \(P_{R_c}\) as the value. Finally, \(P\) and the soft attention map \(S\) are used to compensate the de-rained image \(\hat X_c\), producing the pipeline output \(\hat X_c^{out}\).
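The attention step described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes features are flattened to one vector per spatial position, that relevance is cosine similarity, and that the compensation is a residual sum weighted by the soft attention map. The function names `reference_attention` and `fuse` and the toy inputs are hypothetical.

```python
import numpy as np


def reference_attention(q, k, v, eps=1e-8):
    """Transfer reference features to query positions (illustrative sketch).

    q: (Nq, C)  features of the de-rained image (query)
    k: (Nk, C)  features of the de-rained reference (key)
    v: (Nk, Cv) features of the clean reference (value)
    """
    # Assumption: relevance is the cosine similarity between query and key vectors.
    qn = q / (np.linalg.norm(q, axis=1, keepdims=True) + eps)
    kn = k / (np.linalg.norm(k, axis=1, keepdims=True) + eps)
    relevance = qn @ kn.T                # (Nq, Nk)

    hard = relevance.argmax(axis=1)      # hard attention map H: index of the best key
    soft = relevance.max(axis=1)         # soft attention map S: confidence of that match
    transferred = v[hard]                # feature P gathered from the clean reference
    return transferred, hard, soft


def fuse(x_feat, transferred, soft):
    # Assumption: compensation is a residual sum weighted by the soft attention map.
    return x_feat + soft[:, None] * transferred


# Toy example with two positions and two channels.
q = np.array([[1.0, 0.0], [0.0, 2.0]])
k = np.array([[0.0, 1.0], [3.0, 0.0]])
v = np.array([[10.0, 10.0], [20.0, 20.0]])

P, H, S = reference_attention(q, k, v)
out = fuse(np.zeros_like(P), P, S)
```

In this toy case each query position matches a different key, so `H` selects the value rows in swapped order and `S` is close to 1 for both positions. A weaker match would yield a smaller `S` and therefore a smaller contribution from the transferred feature.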

Results

In this section, we present results obtained on the Cityscapes-Rain